IETS Using Neuromorphic Cameras

Inceptive Event Time-Surfaces (IETS) for Object Classification using Neuromorphic Cameras

Introduction

A standard image sensor comprises an array of Active Pixel Sensors (APS). Each APS circuit reports the intensity of the image formed at the focal plane by cycling between an integration period (during which each pixel detector collects and counts photons) and a readout period (during which the digital counts from all pixels are combined into a single frame). Motion detected and estimated across frames is useful in many computer vision tasks. Unfortunately, fast-moving objects are difficult to capture because of the limitations of the integration and readout circuitry: object motion that is fast relative to the integration period induces blur and other artifacts. Additionally, because all pixels share a single exposure setting, parts of the scene may be underexposed while others are saturated. Both issues degrade the quality of the captured video frames, reducing our ability to detect or recognize objects by their shapes or motions. High-speed cameras with very fast frame rates can resolve blur, but they are expensive, consume considerable power, generate large amounts of data, and require exposure settings to be adjusted.

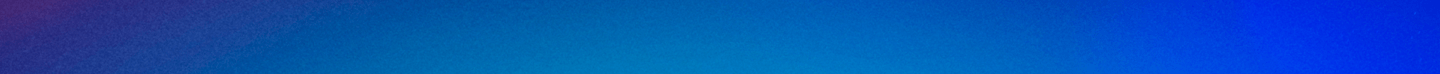

Figure 1. Event Generation. (a) At each pixel, intensity variations trigger an event at every crossing of a log-scaled intensity level. The first event in a series of consecutive events is called an Inceptive Event. (b) Time-surface generation in the presence of noise.

Event-based cameras were engineered to overcome these limitations of the APS circuitry found in conventional framing cameras. These neuromorphically inspired cameras can operate at extremely high temporal resolution (>800 kHz), low latency (20 microseconds), wide dynamic range (>120 dB), and low power (30 mW). Because they report only changes in pixel intensity, they require a new set of techniques for basic image processing and computer vision tasks such as optical flow, feature extraction, gesture recognition, and object recognition.
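The event-generation principle of Figure 1(a) can be illustrated with a small simulation. The Python sketch below models a single pixel under idealized assumptions of our own: a noise-free intensity signal sampled at known times, a fixed log-contrast threshold (the hypothetical parameter `contrast`), and crossing times found by linear interpolation between samples. Real sensors add noise, per-pixel threshold mismatch, and refractory periods, none of which are modeled here.

```python
import math
from typing import List, Tuple

# (timestamp in seconds, polarity: +1 for brighter, -1 for darker)
Event = Tuple[float, int]


def generate_events(times: List[float], intensities: List[float],
                    contrast: float = 0.3) -> List[Event]:
    """Idealized single-pixel event generator (illustrative assumption, not a sensor model).

    An event fires each time log intensity moves `contrast` away from the
    level at which the previous event fired. Crossing times are linearly
    interpolated between samples; noise and refractory periods are ignored.
    """
    events: List[Event] = []
    ref = math.log(intensities[0])  # log level of the most recent event
    for k in range(1, len(times)):
        t0, t1 = times[k - 1], times[k]
        l0, l1 = math.log(intensities[k - 1]), math.log(intensities[k])
        # A large change between samples crosses several levels, producing a burst of events
        while abs(l1 - ref) >= contrast:
            polarity = 1 if l1 > ref else -1
            ref += polarity * contrast          # next level crossed
            frac = (ref - l0) / (l1 - l0)       # where the crossing falls in [t0, t1]
            events.append((t0 + frac * (t1 - t0), polarity))
    return events


# Brightening from 1.0 to 2.0 crosses two levels; dimming to 1.2 crosses one.
print(generate_events([0.0, 1.0, 2.0], [1.0, 2.0, 1.2]))
```

The burst produced by a large intensity change is the "series of consecutive events" whose first member Figure 1(a) calls an Inceptive Event.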

The time-surface, which encodes each event's timestamp as an intensity, is one such technique and has proven useful in pattern recognition. However, time-surfaces are sensitive both to noise and to the multiple, mutually delayed events that a single image edge generates when the intensity change is large. Both effects degrade time-surfaces much as blurring degrades APS data. In this work, we propose IETS, which aims to extract noise-robust, low-latency features that correspond to complex object edge contours over a temporal window. IETS extends prior methods, achieving higher object recognition accuracy while removing over 70% of time-surface events. We verify the effectiveness of our object classification framework on multiple datasets.
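The text describes a time-surface only as encoding event time as an intensity. One common formulation, used here as an assumption, assigns each pixel the value exp(-(t_ref - t_last) / tau), where t_last is the pixel's most recent event time at or before a reference time t_ref and tau is a decay constant. The NumPy sketch below also keeps separate channels per polarity, which is another assumption. Note that a lone noise event produces a pixel just as bright as a genuine edge event, which is the noise sensitivity described above.

```python
import numpy as np


def time_surface(events, width, height, t_ref, tau=0.05):
    """Exponentially decaying time-surface (one common formulation; tau is an assumed value).

    events: iterable of (x, y, t, p), t in seconds, p in {+1, -1}.
    Returns an array of shape (2, height, width): channel 0 holds positive
    events, channel 1 negative events. Values lie in [0, 1]; pixels with no
    event at or before t_ref are 0.
    """
    last_t = np.full((2, height, width), -np.inf)
    for x, y, t, p in events:
        if t <= t_ref:  # ignore events after the reference time
            c = 0 if p > 0 else 1
            last_t[c, y, x] = max(last_t[c, y, x], t)
    # Recent events map to values near 1; exp(-inf) = 0 for silent pixels.
    return np.exp(-(t_ref - last_t) / tau)


events = [(1, 0, 0.10, 1), (1, 0, 0.05, 1), (0, 1, 0.08, -1)]
surface = time_surface(events, width=3, height=2, t_ref=0.10)
print(surface.round(3))
```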

Goals/Objectives

- Improve dimensionality reduction for neuromorphic vision cameras

- Remove noisy events and increase robustness to outliers for a wide range of applications

- Advance the state of the art in object classification for event-based sensors

Methodology

To advance object classification using event data, we propose a novel concept called Inceptive Event Time-Surfaces (IETS). IETS extends previous methods to improve dimensionality reduction and noise robustness. It retains features critical to object classification (i.e., corners and edges) by fitting time-surfaces to a subset of events. Unlike previous approaches, which generated handcrafted features from noisy event data, IETS uses deep convolutional neural networks (CNNs) to learn features from less noisy time-surface images. As demonstrated by experiments on the open N-CARS dataset, IETS combined with CNNs achieves new state-of-the-art classification performance.
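The source defines an inceptive event as the first event in a series of consecutive events but does not spell out the selection rule in this section. The sketch below therefore uses a hypothetical two-threshold rule of our own for illustration: an event is kept if (1) the previous event at the same pixel does not exist, has the opposite polarity, or occurred more than `tau_gap` seconds earlier, and (2) the next event at the same pixel has the same polarity and follows within `tau_follow` seconds, so isolated events are treated as noise. Both thresholds and the noise criterion are assumptions, not the published IETS parameters.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (x, y, timestamp in seconds, polarity +1/-1)
Event = Tuple[int, int, float, int]


def inceptive_events(events: List[Event], tau_gap: float = 0.01,
                     tau_follow: float = 0.01) -> List[Event]:
    """Keep the first event of each same-polarity burst at a pixel.

    Hypothetical rule for illustration; thresholds are assumed values.
    Isolated events (no same-polarity follower within tau_follow) are
    treated as noise and dropped.
    """
    by_pixel: Dict[Tuple[int, int], List[Event]] = defaultdict(list)
    for ev in sorted(events, key=lambda e: e[2]):  # time order per pixel
        by_pixel[(ev[0], ev[1])].append(ev)

    kept: List[Event] = []
    for seq in by_pixel.values():
        for i, (x, y, t, p) in enumerate(seq):
            prev = seq[i - 1] if i > 0 else None
            nxt = seq[i + 1] if i + 1 < len(seq) else None
            # (1) starts a burst: no recent same-polarity predecessor
            starts_burst = prev is None or prev[3] != p or t - prev[2] > tau_gap
            # (2) not isolated: a same-polarity event trails closely behind
            has_trailer = nxt is not None and nxt[3] == p and nxt[2] - t <= tau_follow
            if starts_burst and has_trailer:
                kept.append((x, y, t, p))
    return sorted(kept, key=lambda e: e[2])


stream = [
    (0, 0, 0.000, 1), (0, 0, 0.002, 1), (0, 0, 0.004, 1),  # one burst: keep first
    (1, 0, 0.010, 1),                                      # isolated: treated as noise
    (2, 0, 0.000, 1), (2, 0, 0.001, 1),                    # brightening burst
    (2, 0, 0.050, -1), (2, 0, 0.051, -1),                  # later dimming burst
]
print(inceptive_events(stream))
```

The surviving events could then be passed to a time-surface construction; how IETS fits surfaces to them is not detailed in this section.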

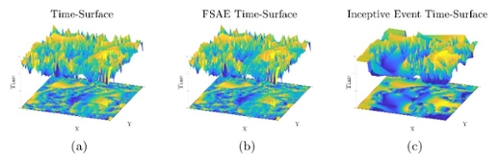

Figure 2. Time-Surface Visualization. (a) Noisy time-surface compiled from ∼17k events, shown as a 2D image (bottom) and as a 3D mesh (top). (b) The same visualization constructed from a subset of ∼8k FSAE events. (c) The same visualization constructed from a subset of ∼3k IETS events. The IETS time-surface shows significantly less noise and represents meaningful image features better than the unfiltered sensor events or the FSAE events.

Results/Evaluations

The N-CARS dataset is a large, real-world, public event-based dataset for car classification. It is composed of 12,336 car samples and 11,693 non-car (background) samples. The camera was mounted behind the windshield of a car, giving a view similar to what the driver would see. Each sample contains exactly 100 milliseconds of data, with 500 to 59,249 events per sample.

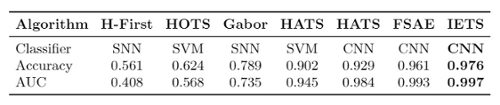

Each N-CARS sample was processed into an image using IETS. Algorithms were evaluated with the standard metrics of accuracy and Area Under the Curve (AUC). The best score was obtained by augmenting the training data with flipped IETS images, yielding a maximum accuracy of 0.973. Table 1 compares IETS with other state-of-the-art algorithms; this accuracy is a considerable improvement over the published HATS score of 0.902, and AUC also improved from 0.945 to 0.997. To ensure that the gains did not come entirely from replacing the Support Vector Machine (SVM) with a CNN, HATS features were used to train the same GoogLeNet architecture; these results are reported as HATS/CNN. Additionally, to show the improvement IETS offers in generating a time-surface, FSAE images were used to train the same architecture and are included for comparison.
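For reference, both metrics can be computed from per-sample classifier scores on a binary car/background task. The sketch below is illustrative: accuracy uses an assumed decision threshold of 0.5, and AUC uses the Mann-Whitney formulation (the probability that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as one half), which equals the area under the ROC curve. The pairwise loop is quadratic and chosen for clarity; for a dataset the size of N-CARS, a sort-based implementation such as scikit-learn's `roc_auc_score` would be more practical.

```python
from typing import Sequence


def accuracy(labels: Sequence[int], scores: Sequence[float],
             threshold: float = 0.5) -> float:
    """Fraction of samples whose thresholded score matches the label (1 = car, 0 = background)."""
    correct = sum((s >= threshold) == (y == 1) for y, s in zip(labels, scores))
    return correct / len(labels)


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC via the Mann-Whitney statistic: P(positive score > negative score), ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]  # made-up scores, not results from the paper
print(accuracy(labels, scores), roc_auc(labels, scores))  # 0.5 0.75
```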

Table 1. Classification results on N-CARS.

Future Work

Overall, event-based sensors have a wide range of potential applications because of their speed, size, low memory requirements, and high dynamic range. This work presents an algorithm that improves state-of-the-art performance for the classification of cars. As classification rates on N-CARS approach 100%, the lack of large labeled datasets will limit further advancement in this area. Several simulators now exist for generating synthetic data and have been used successfully for testing in a number of papers. Although these simulators may be useful in the short term, real-world data is always preferable, because noise, calibration, and manufacturing defects are difficult to simulate reliably.

Two limitations of IETS should be addressed in future work. First, IETS assumes that edges triggering events rarely generate large, overlapping time-surfaces within 100 milliseconds. This assumption may not hold in all scenarios: a spinning fan, a pulsing light, or a very fast-moving object would generate overlapping surfaces and likely limit the utility of IETS. The IETS algorithm currently averages overlapping surfaces, which is not optimal, because these overlapping signatures are information that a standard camera cannot capture, and averaging blends them together. Second, once time-surfaces are generated from inceptive events (IEs), no attempt is made to recover events originally filtered out as noise. A two-stage filter design would help recover these events and broaden the applicability of the algorithm.