Breast Cancer Classification

Breast Cancer Classification from Histopathological Images with Inception Recurrent Residual Convolutional Neural Network

Introduction

The Deep Convolutional Neural Network (DCNN) is one of the most powerful and successful deep learning approaches. DCNNs have already delivered superior performance across medical imaging tasks, including breast cancer classification, segmentation, and detection. Breast cancer is one of the most common and dangerous cancers affecting women worldwide. We propose a method for breast cancer classification based on the Inception Recurrent Residual Convolutional Neural Network (IRRCNN) model. The IRRCNN is a DCNN model that combines the strengths of the Inception Network (Inception-v4), the Residual Network (ResNet), and the Recurrent Convolutional Neural Network (RCNN). It outperforms equivalent Inception Networks, Residual Networks, and RCNNs on object recognition tasks. Here, the IRRCNN is applied to breast cancer classification on two publicly available datasets: BreaKHis and the Breast Cancer Classification Challenge 2015. The experimental results are compared against existing machine learning and deep learning approaches for image-based, patch-based, image-level, and patient-level classification. On both datasets, the IRRCNN model provides better classification performance than existing approaches in terms of sensitivity, Area Under the Curve (AUC), the ROC curve, and global accuracy.

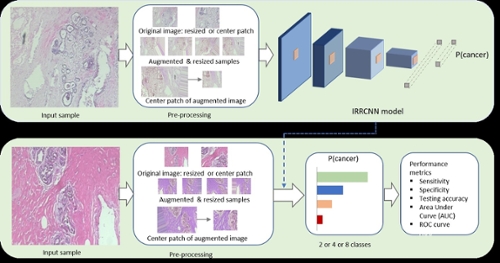

Figure 1. Implementation diagram for breast cancer recognition using the IRRCNN model. The upper part of the figure shows the steps used for training the system, and the lower part shows the testing phase, where the trained model is applied. The test results are evaluated with several performance metrics.
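The introduction names sensitivity, AUC, and global accuracy as evaluation metrics. As a minimal sketch of how these could be computed for a binary task (the function names, the 0.5 threshold, and the toy labels and scores below are illustrative assumptions, not the evaluation code used in this work):

```python
def sensitivity(y_true, y_pred, positive=1):
    """True-positive rate: TP / (TP + FN) for the chosen positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    return tp / (tp + fn)


def global_accuracy(y_true, y_pred):
    """Fraction of all samples whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def auc(y_true, scores, positive=1):
    """Area under the ROC curve via pairwise ranking.

    Equals the probability that a randomly chosen positive sample receives a
    higher score than a randomly chosen negative one (ties count as half).
    """
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): 1 = malignant, 0 = benign.
y_true = [1, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.3, 0.4, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

print(sensitivity(y_true, y_pred), global_accuracy(y_true, y_pred), auc(y_true, scores))
```

The pairwise AUC is quadratic in the number of samples, which is fine for illustration; a sort-based implementation would be preferable on full datasets.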

Goals/Objectives

- Magnification-factor-invariant binary and multi-class breast cancer classification using the IRRCNN model.

- Experiments on publicly available datasets for breast cancer histopathology (BreaKHis and Breast Cancer Classification Challenge 2015 datasets).

Methodology

The Inception Recurrent Residual Convolutional Neural Network (IRRCNN) is an improved hybrid DCNN architecture that builds on the Inception network, the residual network, and the RCNN. Its main advantage is better recognition performance with the same number of network parameters, or fewer, than equivalent deep learning approaches such as Inception, the RCNN, and the residual network. The inception-residual units used in this model are based on those of Inception-v4. For model details: https://link.springer.com/article/10.1007/s00521-018-3627-6
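To make the combination of the three ideas concrete, the following is a heavily simplified, single-channel 1-D sketch in plain Python. It is not the published IRRCNN: the function names, the kernel values, the number of recurrent steps, and the use of averaging in place of channel concatenation followed by a 1x1 convolution are all assumptions made for illustration. It only shows the general pattern of parallel branches with different kernel sizes (inception), convolutions whose output is fed back over a few steps (recurrent), and an identity shortcut (residual).

```python
def conv1d_same(signal, kernel):
    """Zero-padded 1-D cross-correlation; expects an odd-length kernel."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def recurrent_conv(signal, forward_kernel, recurrent_kernel, steps=2):
    """Recurrent convolution: the feed-forward response is fixed, and the
    layer's own previous output is convolved and added back at every step."""
    feed_forward = conv1d_same(signal, forward_kernel)
    state = relu(feed_forward)  # step 0: feed-forward input only
    for _ in range(steps):
        recurrent = conv1d_same(state, recurrent_kernel)
        state = relu([f + r for f, r in zip(feed_forward, recurrent)])
    return state


def irr_unit(signal, branches, steps=2):
    """Inception-style parallel recurrent branches plus a residual shortcut.

    `branches` is a list of (forward_kernel, recurrent_kernel) pairs.
    Averaging the branches is a stand-in (assumption) for concatenation
    followed by a 1x1 convolution.
    """
    outputs = [recurrent_conv(signal, fk, rk, steps) for fk, rk in branches]
    merged = [sum(vals) / len(outputs) for vals in zip(*outputs)]
    return [x + m for x, m in zip(signal, merged)]  # residual addition


# Hypothetical kernels: one size-1 branch and one size-3 branch.
example_branches = [
    ([0.5], [0.1]),
    ([0.2, 0.5, 0.2], [0.0, 0.1, 0.0]),
]
print(irr_unit([1.0, 2.0, 3.0, 2.0, 1.0], example_branches))
```

Because of the shortcut, the unit reduces to the identity when all kernels are zero, which is the property that makes residual stacks easier to train.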

Results

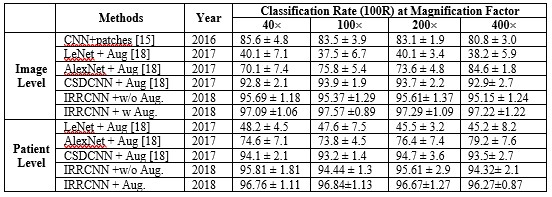

In this work we achieved testing accuracies of 97.95±1.07% and 97.65±1.20% for binary (benign versus malignant) breast cancer (BC) classification at the image level and patient level, respectively. This is a 1.05% and 0.55% improvement in average performance over the highest accuracies reported for image-level and patient-level analysis in a recently published paper [1]. Furthermore, our proposed IRRCNN model produced testing accuracies of 97.57±0.89% and 96.84±1.13% for multi-class BC classification at the image level and patient level, respectively. These results are a 3.67% and 2.14% improvement in average recognition accuracy over the latest performance reported in [1]. The experimental results were compared against recently published deep learning and machine learning approaches and show that our proposed model outperforms the existing algorithms for breast cancer classification.

Table 1. Breast cancer classification results for multi-class (8 classes) using the BreaKHis dataset.
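The results above distinguish image-level from patient-level accuracy. The sketch below shows one way the two could differ, assuming the definitions commonly used with BreaKHis: image-level accuracy is the fraction of correctly classified images, while patient-level accuracy averages each patient's own fraction of correctly classified images. The record layout, function names, and toy data are hypothetical, and this page does not confirm that these exact definitions were used.

```python
from collections import defaultdict


def image_level_accuracy(records):
    """records: iterable of (patient_id, true_label, predicted_label)."""
    records = list(records)
    correct = sum(1 for _, t, p in records if t == p)
    return correct / len(records)


def patient_level_accuracy(records):
    """Mean over patients of each patient's fraction of correct images."""
    per_patient = defaultdict(lambda: [0, 0])  # patient_id -> [correct, total]
    for patient_id, t, p in records:
        per_patient[patient_id][1] += 1
        if t == p:
            per_patient[patient_id][0] += 1
    scores = [correct / total for correct, total in per_patient.values()]
    return sum(scores) / len(scores)


# Toy data (hypothetical): patient "A" has 4 images, patient "B" has 1.
toy_records = [
    ("A", "malignant", "malignant"),
    ("A", "malignant", "malignant"),
    ("A", "malignant", "benign"),
    ("A", "malignant", "malignant"),
    ("B", "benign", "benign"),
]
print(image_level_accuracy(toy_records))    # 4 of 5 images correct
print(patient_level_accuracy(toy_records))  # mean of 3/4 and 1/1
```

The two numbers diverge whenever patients contribute different numbers of images, which is why both are reported separately.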

Reference

[1] https://link.springer.com/article/10.1007/s10278-019-00182-7